JRDB-PanoTrack 2024

Advancing the state-of-the-art open & closed-world panoptic segmentation and tracking.

JRDB-PanoTrack contains new manually labeled panoptic segmentation and tracking annotations for the entire train and test video set. These annotations include 428,000 highly detailed panoptic segmentation masks and 27,000 mask tracklets. A key addition in JRDB-PanoTrack is multi-label annotation: objects that are behind glass or hanging on walls are labeled with two distinct classes, a significant step forward in the understanding of densely populated human scenes.

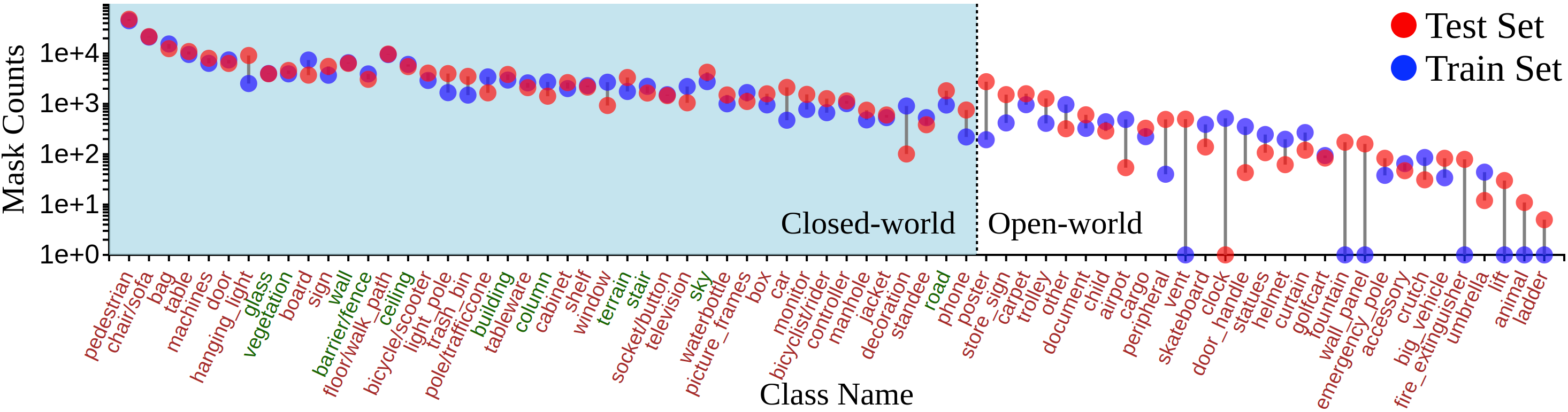

There are 71 object classes in JRDB-PanoTrack, which are classified into 60 thing (such as pedestrians, cars, and laptops) and 11 stuff (like sky and walls) classes. In the figure below, we show all object classes in train and test sets, together with mask counts.

Closed-world settings

1. pedestrian 2. chair/sofa 3. bag 4. table 5. machines 6. door 7. hanging_light 8. glass 9. vegetation 10. board 11. sign 12. wall 13. barrier/fence 14. floor/walking_path 15. ceiling 16. bicycle/scooter 17. light_pole 18. trash_bin 19. pole/trafficcone 20. building 21. tableware 22. column 23. cabinet 24. shelf 25. window 26. terrain 27. stair 28. big_socket/button 29. television 30. sky 31. waterbottle 32. picture_frames 33. box 34. car 35. monitor 36. bicyclist/rider 37. controller 38. manhole 39. jacket 40. decoration 41. standee 42. road 43. phone

Open-world settings

1. poster 2. store_sign 3. carpet 4. trolley 5. other 6. document 7. child 8. airpot 9. cargo 10. peripheral 11. vent 12. skateboard/segway/hoverboard 13. clock 14. door_handle 15. statues 16. helmet 17. curtain 18. golfcart 19. fountain 20. wall_panel 21. emergency_pole 22. accessory 23. crutch 24. big_vehicle 25. fire_extinguisher 26. umbrella 27. lift 28. animal 29. ladder

For each scene, we provide a .json file with the COCO-style annotations dictionary for that scene. The segmentation data itself is contained in the annotations list, which looks like the following. Annotations with body pose information use category_id=2.

    "categories": [
        {'id': 1, 'name': 'road', 'isthing': True},
        {'id': 2, 'name': 'terrain', 'isthing': True},
        ...
    ],
    "images": [
        {'id': 1, 'file_name': 'sequence_name/000000.jpg', 'width': ..., 'height': ...},
        {'id': 2, 'file_name': 'sequence_name/000001.jpg', 'width': ..., 'height': ...},
        ...
    ],
    "annotations": [
        {
            'id': 1,
            'image_id': 1,
            'category_id': 1,
            'segmentation': {
                'counts': '...RLE encoded mask...',
                'size': [height, width]
            },
            'bbox': [x, y, w, h],
            'area': 10000,
            'iscrowd': 0,
            'attributes': {
                'occluded': False,
                'tracking_id': 1,  # track id, for thing classes only
                'floor_id': 1,  # floor id if the class is 'floor'; different floors are tracked in JRDB-PanoTrack
            },
        },
        ...
    ]
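
As a rough illustration of how a scene dictionary with this layout might be consumed, the sketch below groups annotations by image file and resolves category names. It uses only the Python standard library. The function name `index_scene` and the sample values are hypothetical, and the real files may contain fields not shown here.

```python
from collections import defaultdict


def index_scene(scene):
    """Group annotations by image file and attach the category name.

    `scene` is a dict holding the "categories", "images" and "annotations"
    lists described above (for a real file, the result of json.load).
    """
    names = {c["id"]: c["name"] for c in scene["categories"]}
    files = {im["id"]: im["file_name"] for im in scene["images"]}
    per_image = defaultdict(list)
    for ann in scene["annotations"]:
        x, y, w, h = ann["bbox"]  # COCO-style [x, y, width, height]
        per_image[files[ann["image_id"]]].append({
            "category": names[ann["category_id"]],
            "box_xyxy": (x, y, x + w, y + h),
            # tracking_id is documented for thing classes only, so it may be absent
            "tracking_id": ann.get("attributes", {}).get("tracking_id"),
        })
    return dict(per_image)


# Tiny hand-made scene for illustration only; the values are made up.
example_scene = {
    "categories": [{"id": 1, "name": "road", "isthing": True}],
    "images": [{"id": 1, "file_name": "sequence_name/000000.jpg",
                "width": 100, "height": 50}],
    "annotations": [{"id": 1, "image_id": 1, "category_id": 1,
                     "bbox": [10, 20, 30, 5], "area": 150, "iscrowd": 0,
                     "attributes": {"occluded": False, "tracking_id": 1}}],
}
indexed = index_scene(example_scene)
```

Decoding the RLE `counts` string into a binary mask is left out on purpose, since it needs a COCO mask library rather than the standard library.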

These annotations were collected over a period of several months. The annotation process starts with an open-ended list of classes that any annotator can extend; any clearly visible and semantically meaningful object is annotated. We generally annotate all visible objects in a scene whose bounding box area is at least 100 pixels; below this, we find that objects are too small to be semantically meaningful. While JRDB videos are recorded at 15 fps, we annotate at 1 fps (one frame per second). These annotations are provided as JRDB-PanoTrack.
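
The two numbers in this paragraph reduce to simple arithmetic, sketched below. Two assumptions are not stated in the text: that the annotated frames are every 15th frame starting from frame 0, and that the 100-pixel threshold is applied to the width times height of the COCO-style box. The helper names are hypothetical.

```python
VIDEO_FPS = 15       # JRDB video frame rate
ANNOTATION_FPS = 1   # rate at which frames are annotated
MIN_BOX_AREA = 100   # minimum bounding box area, in pixels


def annotated_frame_indices(num_frames, start=0):
    """Frame indices annotated at 1 fps in a 15 fps video.

    Assumption: annotation begins at `start` and takes every 15th frame.
    """
    step = VIDEO_FPS // ANNOTATION_FPS
    return list(range(start, num_frames, step))


def is_large_enough(bbox):
    """True if a COCO-style [x, y, w, h] box covers at least 100 pixels."""
    _, _, w, h = bbox
    return w * h >= MIN_BOX_AREA
```

For example, a 45-frame clip would yield three annotated frames under these assumptions, and a 10 x 10 box would just meet the size threshold.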

Each segmentation annotation for a thing class comes with a tracking_id attribute, which remains consistent for the same object across the video sequence and across multiple camera views.
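
A minimal sketch of how tracklets could be assembled from the tracking_id attribute follows. It assumes that image ids increase with time and, because the text does not say whether tracking ids are unique across classes, it keys each tracklet by the pair (category_id, tracking_id). The function name `build_tracklets` is hypothetical.

```python
from collections import defaultdict


def build_tracklets(annotations):
    """Map (category_id, tracking_id) to a sorted list of (image_id, annotation id).

    Annotations without a tracking_id (for example stuff classes) are skipped.
    """
    tracks = defaultdict(list)
    for ann in annotations:
        tracking_id = ann.get("attributes", {}).get("tracking_id")
        if tracking_id is None:
            continue
        key = (ann["category_id"], tracking_id)
        tracks[key].append((ann["image_id"], ann["id"]))
    # Sort each tracklet by image id so it follows the frame order.
    return {key: sorted(items) for key, items in tracks.items()}
```

Each returned list gives the frames in which one object appears, which is the per-object view a tracking method needs.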

For Panoptic Segmentation (Closed and Open-world), we evaluate the submissions using PQ (Panoptic Quality) and OSPA (OPS) metrics. For Panoptic Tracking, we use STQ (Segmentation Tracking Quality) and OSPA2 (OPT2) metrics. OSPA and OSPA2 will be the main metrics for the challenge.
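
For readers unfamiliar with PQ, the sketch below computes it for a single class using the standard definition: the sum of IoUs over matched segment pairs, divided by the number of true positives plus half the false positives plus half the false negatives. It is not taken from the JRDB-PanoTrack toolkit, which may differ in matching and averaging details. The function name and the zero return value for an empty class are choices made for this example.

```python
def panoptic_quality(matched_ious, num_false_positives, num_false_negatives):
    """Per-class PQ from matched IoUs and unmatched segment counts.

    matched_ious: IoU of each matched prediction/ground-truth pair
    (in the standard definition, a pair matches when its IoU exceeds 0.5).
    """
    num_true_positives = len(matched_ious)
    denominator = (num_true_positives
                   + 0.5 * num_false_positives
                   + 0.5 * num_false_negatives)
    if denominator == 0:
        return 0.0  # no segments of this class at all; a choice for this sketch
    return sum(matched_ious) / denominator
```

With two matches of IoU 0.8 and 0.6, one false positive and one false negative, the result is 1.4 / 3.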

There will be four tracks in the challenge: Open-world Panoptic Segmentation, Closed-world Panoptic Segmentation, Open-world Panoptic Tracking, and Closed-world Panoptic Tracking. Participants can submit to any or all of the tracks.

All methods are evaluated on stitched images of the entire scene, which are provided in the dataset. Participants are encouraged to train their models on stitched images. However, they can also use the individual camera views for training; the toolkit provides the calibration parameters for each camera and the stitching code.

The evaluation toolkit for Panoptic Segmentation and Tracking is released as part of the JRDB-PanoTrack dataset. In addition to the toolkit, we released new calibration and mapping tools for the JRDB dataset.

The toolkit can be found here:

JRDB-PanoTrack Toolkit

We also provide code samples for mapping and projecting the annotations to the 3D world and from individual camera views to the stitched view.

The code samples can be found here:

Tutorials

As part of our CVPR24 challenge, we are releasing the JRDB-PanoTrack dataset:

See Downloads