JackRabbot Dataset and Benchmark (JRDB)

Visual Perception for Navigation in Human Environments

JRDB is a novel dataset collected from our social mobile manipulator, JackRabbot. Our goal is to provide training and benchmarking data for research in the areas of autonomous robot navigation and all perceptual tasks related to social robotics in human environments.

Please note that you are required to log in before downloading JRDB. If you don't have an account, you can sign up for one here.


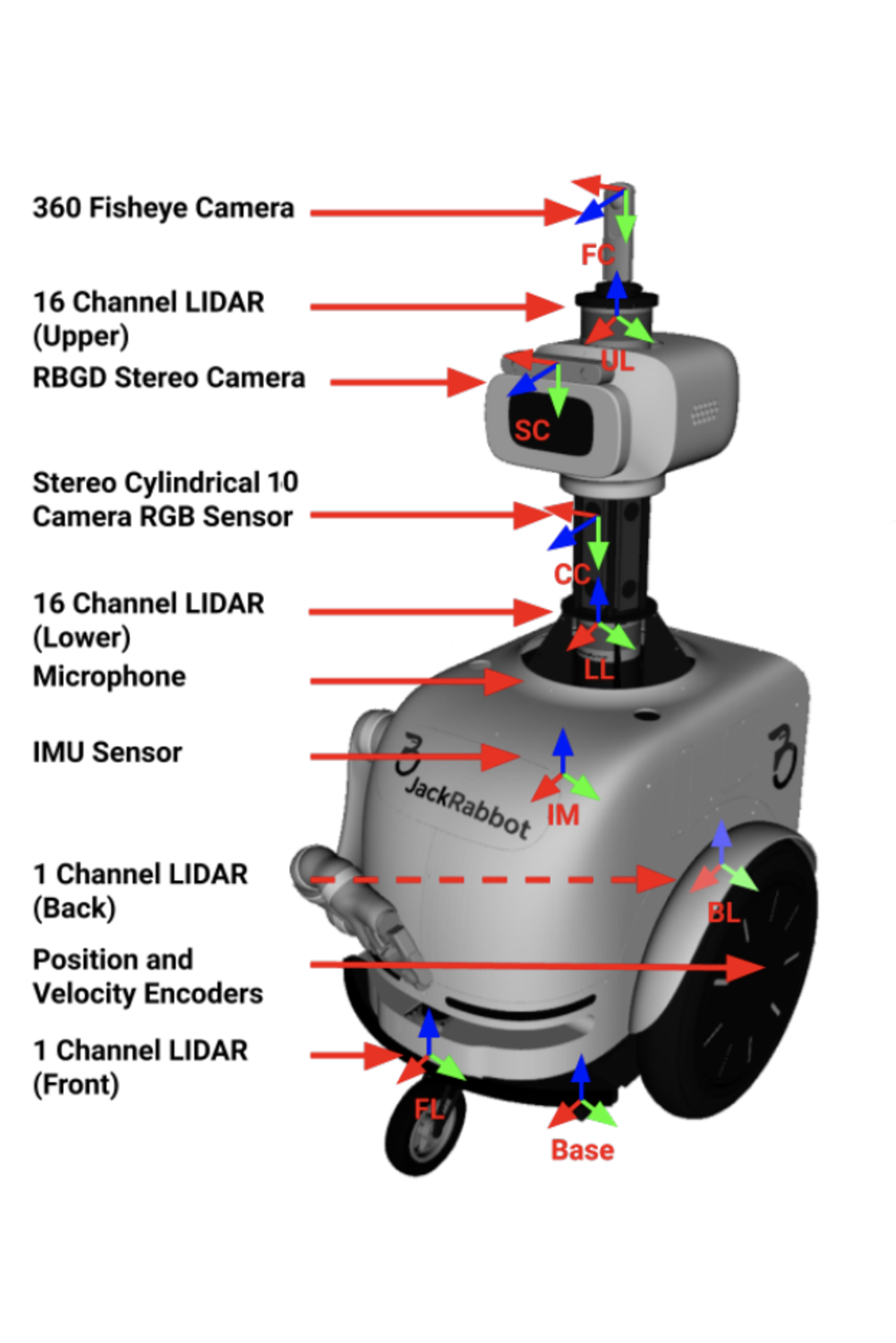

The JackRabbot social mobile manipulator.

November 30, 2023

We will soon be launching our new datasets: JRDB-PanoTrack and JRDB-Social.

March 10, 2023

Our new paper, "JRDB-Pose: A Large-scale Dataset for Multi-Person Pose Estimation and Tracking", has been accepted to CVPR 2023. Find it here.

October 10, 2022

We have extended the Pose Estimation Challenge deadline to October 15 AoE (Anywhere on Earth). You can find the submission leaderboard here. We look forward to seeing all of your submissions!

August 26, 2022

2D and 3D test-set detections, as well as head bounding boxes for both 2D and stitched images, have been released. See Downloads.

August 25, 2022

A visualisation toolkit that supports multiple visualisation settings has been published. Find it here.

August 24, 2022

We are excited to launch the challenge leaderboards for JRDB 2022! Check out the leaderboards here. We're running a challenge for our new dataset, JRDB-Pose, and the winners will get to present their work at our workshop at ECCV 2022.

June 27, 2022

As part of our ECCV22 workshop, we have released the new JRDB 2022 annotations, including 2D human skeleton poses and head bounding boxes, as well as improved 2D and 3D bounding boxes, action labels and social grouping labels. New JRDB 2022 leaderboards will be launched soon.

March 30, 2022

We will organise a new JRDB workshop in conjunction with ECCV22. Click here for more information.

March 2, 2022

The JRDB-ACT paper has been accepted and will be presented at CVPR22. Find the paper here.

November 26, 2021

The JRDB dataset and benchmark have officially moved to Monash University! The previous server at Stanford will shut down soon. All previous submissions have been uploaded by the dataset admins. Please register a new account to make submissions.

The newest iteration of JRDB, featuring all of our video scenes and annotations.

Please log in to view these downloads.

Duy-Tho Le*, Chenhui Gou*, Stavya Datta, Hengcan Shi, Ian Reid, Jianfei Cai, Hamid Rezatofighi

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR),

2024.

Citation:

@inproceedings{le2024jrdbpanotrack,

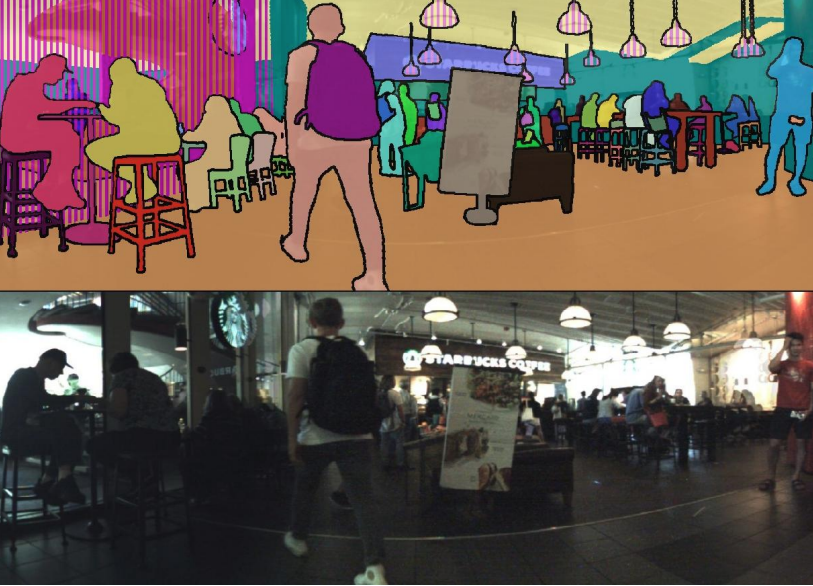

title={JRDB-PanoTrack: An Open-world Panoptic Segmentation and Tracking Robotic Dataset in Crowded Human Environments},

author={Le, Duy-Tho and Gou, Chenhui and Datta, Stavya and Shi, Hengcan and Reid, Ian and Cai, Jianfei and Rezatofighi, Hamid},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

year={2024}

}

Simindokht Jahangard, Zhixi Cai, Shiki Wen, Hamid Rezatofighi

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR),

2024.

Citation:

@inproceedings{jahangard2024jrdbsocial,

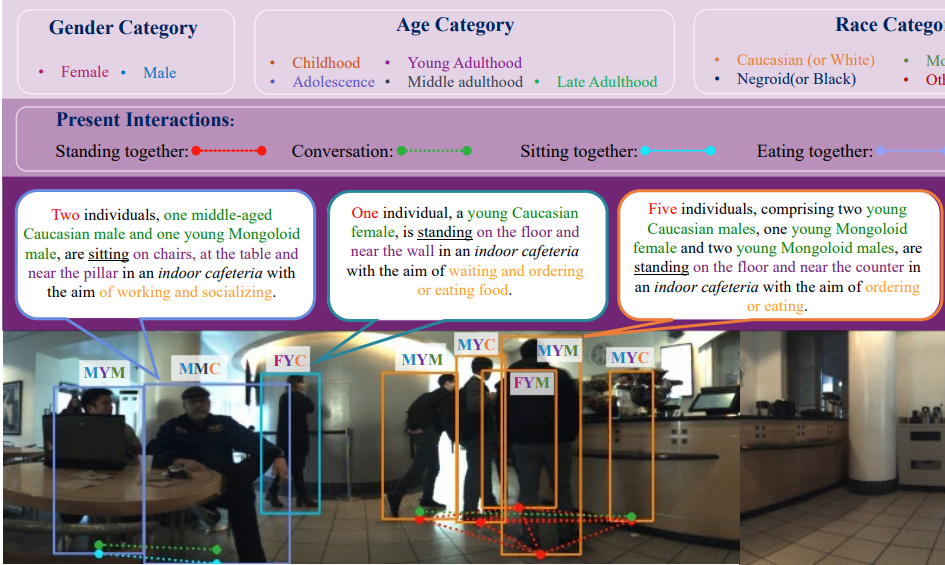

title={JRDB-Social: A Multifaceted Robotic Dataset for Understanding of Context and Dynamics of Human Interactions Within Social Groups},

author={Jahangard, Simindokht and Cai, Zhixi and Wen, Shiki and Rezatofighi, Hamid},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

year={2024}

}

Saeed Saadatnejad, Yang Gao, Hamid Rezatofighi, Alexandre Alahi

arXiv,

2023.

Citation:

@misc{saadatnejad2023jrdb,

title={JRDB-Traj: A Dataset and Benchmark for Trajectory Forecasting in Crowds},

author={Saadatnejad, Saeed and Gao, Yang and Rezatofighi, Hamid and Alahi, Alexandre},

year={2023},

eprint={2311.02736},

archivePrefix={arXiv}

}

Edward Vendrow*, Duy-Tho Le*, Jianfei Cai, Hamid Rezatofighi.

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR),

2023.

Citation:

@inproceedings{vendrow2023jrdb,

title={JRDB-Pose: A Large-scale Dataset for Multi-Person Pose Estimation and Tracking},

author={Vendrow, Edward and Le, Duy Tho and Cai, Jianfei and Rezatofighi, Hamid},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

year={2023}

}

Mahsa Ehsanpour, Fatemeh Saleh, Silvio Savarese, Ian Reid, Hamid Rezatofighi.

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR),

2022.

Citation:

@inproceedings{ehsanpour2022jrdb,

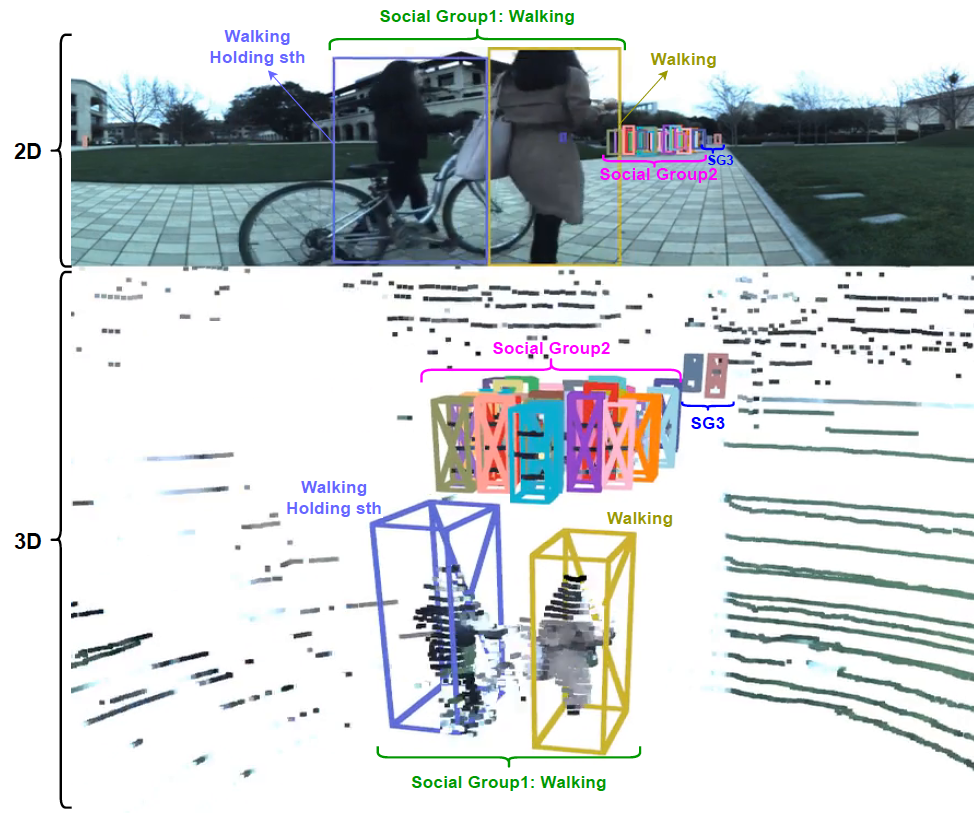

title={JRDB-Act: A Large-Scale Dataset for Spatio-Temporal Action, Social Group and Activity Detection},

author={Ehsanpour, Mahsa and Saleh, Fatemeh and Savarese, Silvio and Reid, Ian and Rezatofighi, Hamid},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

year={2022}

}

Roberto Martín-Martín*, Mihir Patel*, Hamid Rezatofighi*, Abhijeet Shenoi, JunYoung Gwak, Eric Frankel, Amir Sadeghian, Silvio Savarese.

IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI),

2021.

Citation:

@article{martin2021jrdb,

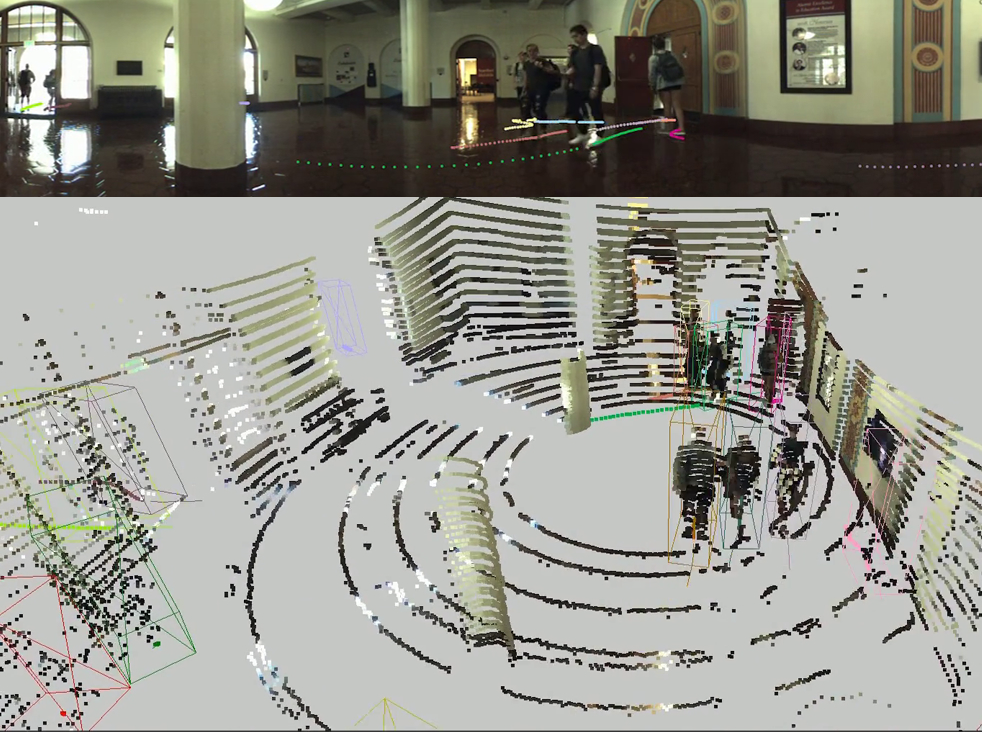

title={{JRDB}: A Dataset and Benchmark of Egocentric Robot Visual Perception of Humans in Built Environments},

author={Martin-Martin, Roberto and Patel, Mihir and Rezatofighi, Hamid and Shenoi, Abhijeet and Gwak, JunYoung and Frankel, Eric and Sadeghian, Amir and Savarese, Silvio},

journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},

year={2021},

publisher={IEEE}

}